Hi, over the past few days we have been at JBI2025 in Madrid, at the Escuela de Ingenieros Industriales, with more than 300 attendees. After the 1st SEBiBC congress last year we were eager for more. I honestly had a great time and enjoyed seeing so many fellow travellers again. We already know that the next congress will be the 2nd SEBiBC, on 11-13 November 2026.

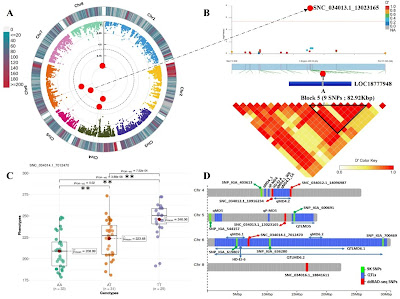

From our lab, Joan Sàrria and I attended with two posters.

Here are my notes on the talks and posters I was able to attend, originally taken in a mix of English and Spanish.

Roser Tormo (Sanger Institute) talks about cell genomics with a focus on the female reproductive system, see bioinfoperl.blogspot.com/2024/10/notas-1er-congreso-SEBiBC.html. They want to reconstruct the folliculogenesis process in the lab and identify the main TFs and enhancers that control the cycle.

Mikel Hernáez (CAM-UNAV) "Uncovering Functional lncRNAs by scRNA-seq with ELATUS". He explains that pseudoalignment is more sensitive than alignment-based mapping for quantifying lncRNAs (kallisto > salmon > STAR > Cell Ranger). They built ELATUS on top of Kallisto, which in single-cell mode discards multimapping reads but yields more false positives than Cell Ranger; paper: https://doi.org/10.1038/s41467-024-54005-7
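To make the idea of pseudoalignment a bit more concrete, here is a minimal toy sketch in Python. It is not how Kallisto or ELATUS are implemented: the sequences, names and k-mer size are hypothetical, and treating a read with any unknown k-mer as unmapped is my own simplification. A read is assigned to the set of transcripts that share all of its k-mers, and reads compatible with more than one gene are discarded.

```python
from collections import Counter, defaultdict

K = 5  # toy k-mer size (assumption); real tools use much longer k-mers


def kmers(seq, k=K):
    """Return the set of all k-mers found in a sequence."""
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}


def build_index(transcripts, k=K):
    """Map every k-mer to the set of transcript names that contain it."""
    index = defaultdict(set)
    for name, seq in transcripts.items():
        for kmer in kmers(seq, k):
            index[kmer].add(name)
    return index


def pseudoalign(read, index, k=K):
    """Intersect the transcript sets of all k-mers in the read.

    Simplification: a single k-mer absent from the index empties the result.
    """
    compatible = None
    for kmer in kmers(read, k):
        hits = index.get(kmer, set())
        compatible = set(hits) if compatible is None else compatible & hits
        if not compatible:
            return set()
    return compatible or set()


def count_unique(reads, index, tx2gene, k=K):
    """Count reads per gene, discarding reads compatible with several genes."""
    counts = Counter()
    for read in reads:
        genes = {tx2gene[t] for t in pseudoalign(read, index, k)}
        if len(genes) == 1:
            counts[genes.pop()] += 1
    return counts


# Hypothetical toy transcripts: two isoforms of one lncRNA and one other gene
TRANSCRIPTS = {
    "lncA-1": "ACGTACGGTTCA",
    "lncA-2": "ACGTACGGAAGC",
    "geneB-1": "TTCAGGACGTACTT",
}
TX2GENE = {"lncA-1": "lncA", "lncA-2": "lncA", "geneB-1": "geneB"}
READS = ["ACGTACGG", "TTCAGGA", "ACGTAC", "GGGGGGG"]

index = build_index(TRANSCRIPTS)
counts = count_unique(READS, index, TX2GENE)
print(dict(counts))
```

In this toy set the read `ACGTAC` matches transcripts from both genes and is dropped, while `ACGTACGG` matches two isoforms of the same gene and is kept.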

Carolina Monzó (I2SysBio-CSIC) "Quality assessment of long read data in multisample lrRNA-seq experiments with SQANTI-reads". Good quality human transcriptome, 73% of known transcripts, 19% novel, with higher depth providing more unknown exon junctions. After tests in several species they still cannot determine the minimum depth needed to saturate transcript models. Paper: https://doi.org/10.1101/gr.280021.124

Daniel López-López (FPS) "The Spanish Polygenic Score (PGS) reference distribution: a resource for personalized medicine". They impute and phase variants in 2.2K samples. Paper: https://doi.org/10.1038/s41431-025-01850-9. PGS are also being used in breeding, to compute breeding values.
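As a reminder of what a polygenic score and a reference distribution are in practice, here is a small Python sketch. It assumes the usual definition of a PGS as a weighted sum of allele dosages; the variant names, weights and genotypes are all made up for illustration and have nothing to do with the actual Spanish resource.

```python
from statistics import mean, stdev

# Hypothetical per-variant effect sizes (weights)
WEIGHTS = {"var1": 0.2, "var2": -0.1, "var3": 0.5}

# Hypothetical reference cohort: allele dosages (0, 1 or 2) per variant
REFERENCE = [
    {"var1": 0, "var2": 1, "var3": 0},
    {"var1": 1, "var2": 0, "var3": 0},
    {"var1": 1, "var2": 1, "var3": 1},
    {"var1": 2, "var2": 0, "var3": 1},
    {"var1": 0, "var2": 2, "var3": 0},
]

# Hypothetical new individual to be placed in the reference distribution
NEW = {"var1": 2, "var2": 1, "var3": 1}


def polygenic_score(dosages, weights):
    """Weighted sum of allele dosages; missing variants count as dosage 0."""
    return sum(w * dosages.get(variant, 0) for variant, w in weights.items())


def place_in_reference(score, ref_scores):
    """Return the z-score and empirical percentile of a score in a cohort."""
    z = (score - mean(ref_scores)) / stdev(ref_scores)
    percentile = sum(s <= score for s in ref_scores) / len(ref_scores)
    return z, percentile


ref_scores = [polygenic_score(person, WEIGHTS) for person in REFERENCE]
new_score = polygenic_score(NEW, WEIGHTS)
z, percentile = place_in_reference(new_score, ref_scores)
print(f"score={new_score:.2f} z={z:.2f} percentile={percentile:.0%}")
```

The point of a population-specific reference is the second function: the same raw score can land at a different percentile depending on the cohort it is compared against.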

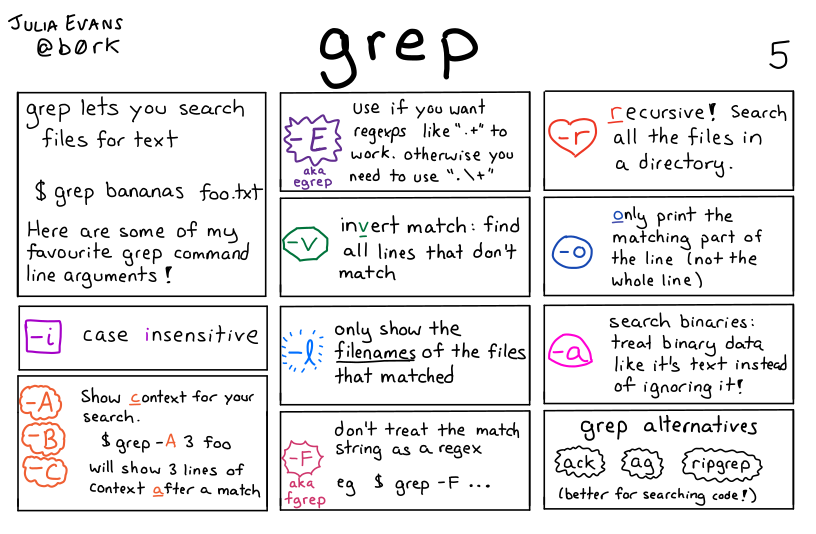

Jacob Fernández Isa, in his poster, uses k-mer arithmetic (https://github.com/refresh-bio/KMC) to define centromeric markers and detect recombination in pollen.
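For readers unfamiliar with k-mer arithmetic, the following Python sketch shows the general idea with in-memory sets. It is not KMC's interface, which works on on-disk k-mer databases at a much larger scale, and it is not the poster's actual pipeline: the sequences, the value of k and the function names are hypothetical, and reverse complements are ignored for simplicity.

```python
from collections import Counter


def count_kmers(seqs, k):
    """Count every k-mer across a list of sequences."""
    counts = Counter()
    for seq in seqs:
        for i in range(len(seq) - k + 1):
            counts[seq[i:i + k]] += 1
    return counts


def subtract(a, b):
    """k-mers present in a but absent from b (keeps the counts from a)."""
    return Counter({kmer: n for kmer, n in a.items() if kmer not in b})


def intersect(a, b):
    """k-mers present in both sets, with the smaller of the two counts."""
    return Counter({kmer: min(n, b[kmer]) for kmer, n in a.items() if kmer in b})


def marker_profile(read, markers_a, markers_b, k):
    """Label each k-mer of a read as parent A, parent B or uninformative."""
    labels = []
    for i in range(len(read) - k + 1):
        kmer = read[i:i + k]
        if kmer in markers_a:
            labels.append("A")
        elif kmer in markers_b:
            labels.append("B")
        else:
            labels.append(".")
    return "".join(labels)


K = 4  # toy k-mer size (assumption)
parent_a = count_kmers(["ACGTACGTTT"], K)  # hypothetical parental sequence
parent_b = count_kmers(["ACGTACGAAA"], K)  # hypothetical parental sequence

a_only = subtract(parent_a, parent_b)  # candidate markers for parent A
b_only = subtract(parent_b, parent_a)  # candidate markers for parent B
shared = intersect(parent_a, parent_b)

# A hypothetical pollen read that switches from A markers to B markers
profile = marker_profile("CGTTACGAAA", a_only, b_only, K)
print(sorted(a_only), sorted(b_only), profile)
```

A read whose profile changes from `A` to `B` along its length is the kind of signal that would suggest a recombination event between the two parental haplotypes.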

Graciela Uria (IIS-FJD, UAM) "Dissecting the functional landscape of rare diseases". They identify new pathogenic variants, which are validated by segregation analysis on patient pedigree data.

Raquel Blanco Martinez-Illescas (IRB) "Sex and smoking influence the clonal structure of the normal human bladder". A lovely talk, which would earn her the best presentation award, where she showed how she discovered 4 genes that explain why men and smokers have a higher prevalence of bladder cancer. This is really natural selection at the tissue level, involving genes that evolve faster. The paper is https://pubmed.ncbi.nlm.nih.gov/41062697

Tim Hubbard. A long talk summarising his perspective on how things are changing in bioinformatics, using the example of the ELIXIR services (visibility, robustness, ease of use), and how now, in addition to data generated in research, we increasingly have access to data collected routinely in day-to-day practice.

Pablo Villoslada-Blanco (CNIO) "Virome Shifts in Pancreatic Ductal Adenocarcinoma: New Insights from Untargeted Metagenomics". In faeces and saliva 5% of reads are viral, with 98% being phages that target particular bacterial taxa (more specific than antibiotics). So far only DNA has been sequenced, not RNA.

Alberto Pascual García (CNB-CSIC) "Novel computational tools for high-throughput design and analysis of microbial consortia". Slides made with LaTeX Beamer. They try to model the metabolism of bacterial communities by defining guilds. They write reactions with bacteria instead of enzymes. Communities are better than single strains at expanding niches. The idea is to design communities. What is still missing: experiments, metabolic models with stoichiometry, and unannotated transporters. Code: https://github.com/sirno/misosoup. Most recent paper: https://doi.org/10.1038/s41467-025-57591-2

Adrián López-García (CBGP UPM, INIA-CSIC) "Beyond taxonomy: global patterns of gene family abundances reveal functional ecological drivers in soil microbiomes". They test deep homolog clustering (by A Rodríguez del Río) to assign functions to ORFs. They use data from a previous study. They find many unannotated gene families that are associated with soil properties (moisture, acidity and organic matter).

Daniel Rico (CABIMER) "Evolutionary analysis of gene ages across TADs associates chromatin topology with whole-genome duplications". Looking mostly at vertebrates, they found that TADs encompass genes of similar age. Gene age is measured using WGDs. Old TADs are more expressed, essential and stable across tissues. Paper: https://doi.org/10.1016/j.celrep.2024.113895

Coral del Val (UGR) "Gene expression networks regulated by human personality". They found a core regulatory module in the brain, with 3 mammalian miRNAs, associated with personality. They use blood RNA-seq data intersected with a brain atlas. They find hints of molecular condensates, as in organelles. They work with sets of personality, with genetics explaining over 60%.

Sonia Tarazona (UPV) "MORE interpretable multi-omic regulatory networks to characterise phenotypes". It produces results that are easy to interpret; they use interpretable multivariate analysis, not AI. The PLS algorithm is the most balanced in simulations. You can choose whether or not to supply prior data (known regulatory links, although for now that means possible unknown interactions will not be discovered). They use gene expression as the target value and the remaining omics to model it. They find hubs and global regulators. Their data are numeric vectors and categorical data.

https://doi.org/10.1093/bib/bbaf270 and https://www.biorxiv.org/content/10.1101/421834v3

Andreia Salvador (UMinho) presents "MOSCA 2.0: A bioinformatics framework for metagenomics, metatranscriptomics and metaproteomics data analysis and visualization" and, along the way, https://github.com/iquasere/KEGGCharter, both widely downloaded from bioconda.

Cedric Notredame (CRG) gives a lecture on "Feeding Hungry AI with Evolution-Augmented Data: Alignments, Phylogenies, and Next Gen Pipelines". He actually covers two things. The first is that adding evolutionary (and structural) information improves multiple sequence alignments (MSA). As an example he gives the results of AlphaFold > ESMfold, and then shows how MSA algorithms scale poorly and choke on current sequence volumes, which is why, in collaboration with Polish colleagues, they have developed https://github.com/refresh-bio/FAMSA. He also shows several examples of how knowing the 3D structure of proteins helps to estimate the distances between them (phylogenies) more accurately, since structure is more conserved and takes longer to saturate than sequence, which defines the twilight zone. He mentions very briefly that in their MSA work they are now using embeddings ("inmersiones" in Spanish), which are better than sequence identity for predicting proteins that recognise RNA molecules. More generally, he talks with great enthusiasm about how AI has made data additive, so that all the data we keep generating will improve future predictions, with AlphaFold as his central example. From now on we can say that we really do need more data, because it has been shown in this and other problems that large amounts of data make it possible to solve previously unsolvable problems. Finally, he spends a good while on how, in order to process large volumes of data in his lab, they invented https://www.nextflow.io, which has ended up becoming the production environment at institutions such as Sanger or the EBI because it ensures reproducibility. He is now editor-in-chief of https://academic.oup.com/journals/pages/nar_genomics_and_bioinformatics and invites us to submit papers on reproducible protocols.
He is asked whether he believes, like T Hubbard, that we will abandon the command line in favour of graphical programming; he answers with allusions to Access, where it was impossible to do the same thing twice with so many clicks, and concludes that to obtain reproducible procedures we will keep using text commands.

See you soon!